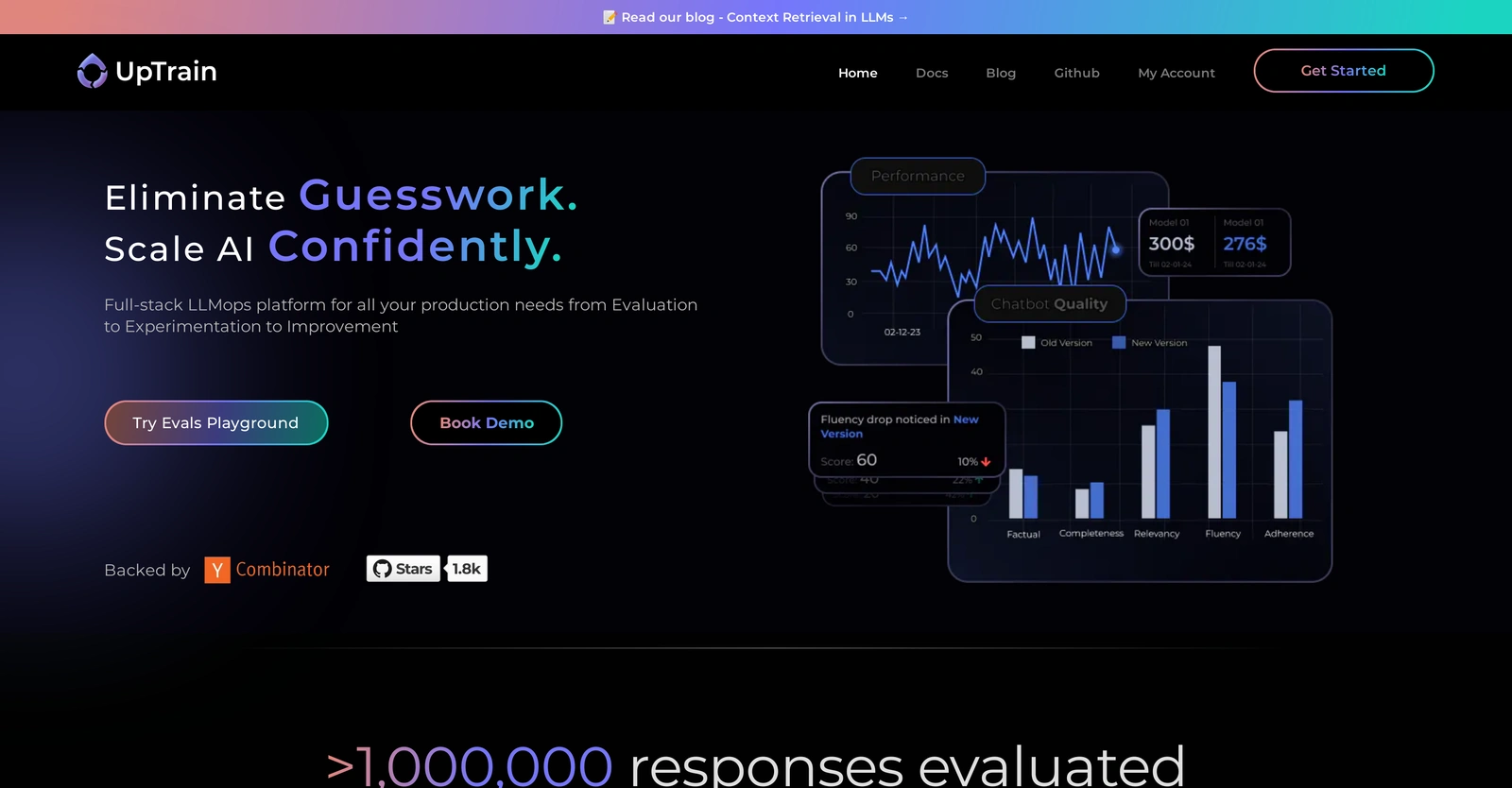

UpTrain is a full-stack LLMOps platform for managing large language model (LLM) applications. It provides enterprise-grade tooling for evaluating, experimenting with, monitoring, and testing LLM applications. Key features include diverse evaluations, systematic experimentation, automated regression testing, root cause analysis, and the creation of enriched test datasets. Users can apply predefined metrics or define their own within the extendable framework and get quantitative scores, which removes guesswork and reduces hours of manual review. With regression testing, every change made to an LLM application is tested automatically, and changes can be rolled back if needed. The platform also surfaces patterns in error cases so that users can make improvements faster. In addition, UpTrain supports creating diverse test sets for different use cases and enriching existing datasets with edge cases captured in production. It is built to meet data governance requirements and can be self-hosted in different cloud environments. UpTrain is backed by Y Combinator, and its core evaluation framework is open source. The platform is aimed at both developers and managers, giving them the tools they need to build, evaluate, and improve LLM applications.


Pros

>90% agreement with human scores

Automated regression testing

Built for enterprise use

Caters to developers and managers

Compliant with data governance

Context awareness parameters

Cost-efficient evaluations

Custom evaluation aspects

Customizable evaluation metrics

Dataset enrichment from production

Discovers and captures edge cases

Diverse evaluation tooling

Easy rollback of changes

Enriched dataset creation

Error pattern insights

Extendable framework for metrics

High-quality evals

Inspect language features

Lowers manual review hours

Open-source core evaluation framework

Precision metrics

Promotes quicker improvements

Quantitative scoring

Reliable handling of large data

Root cause analysis

Safeguard features

Self-hosting capabilities

Single-line integration

Supports cloud-based hosting

Supports diverse test cases

Systematic experimentation capabilities

Task understanding parameters

Y Combinator backed

Cons

Heavy platform

Limited to LLM applications

Metric customization complex

No immediate rollback option

No local hosting option

No real-time error insights

Requires cloud hosting

Requires data governance compliance

Requires infrastructure
