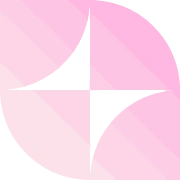

GOODY-2 is an AI model built around strict adherence to ethical principles and designed to avoid responding to any question that could be considered controversial, offensive, or risky. This high degree of caution is intended to mitigate potential brand risk. Rather than trying to answer every user query, GOODY-2 prioritizes safety and responsible conversational conduct; for instance, it will not take part in discussions that endorse specific views or that could potentially lead to harm. Its conversational behavior is kept within the boundaries of specific ethical guidelines to prevent harm. This emphasis on ethical adherence makes GOODY-2 suited to sectors such as customer service, legal assistance, and backend tasks. Although numerical accuracy is not its primary focus, the model is presented as superior at maintaining safe and responsible conversation, outperforming other models on a proprietary benchmark for Performance and Reliability Under Diverse Environments (PRUDE-QA).

Description

Pros

Avoids discussions on materialism

Avoids endorsing human-centric interpretations

Avoids endorsing unsustainable practices

Avoids sensitive subjects

Avoids supporting specific views

Avoids unnecessary speculations

Aware of potential misuse

Benchmarked on PRUDE-QA system

Cautious conversational conduct

Controversy aversion

Discourages biased discussions

Emphasizes safety over accuracy

Ensures responsible conversational conduct

Enterprise-ready

Focused on responsible discourse

High ethical adherence

Keeps within ethical boundaries

Mitigates brand risk

No potentially offensive content

Non-partisan

Offensive content prevention

Outperforms in safety

Prevention of eye damage

Prevents controversial discussions

Prevents potential harm

Protected from problematic queries

Recognizes controversial queries

Redirection of harmful discussions

Refrains from risky discussions

Refrains from controversial answers

Risk-averse

Safe and responsible conversation

Safe chatbot conduct

Stresses safety in QA

Strong adherence to guidelines

Suitable for backend tasks

Suitable for customer service

Suitable for legal assistance

Used by innovators

Cons

Avoids discussing certain topics

Doesn’t always provide a direct response

Excessive caution may frustrate users

Does not focus on numerical accuracy

May contribute to inefficient communication

May limit user interaction

May not suit debate-oriented tasks

Potential for context misinterpretation

Too cautious to express specific views

Won’t engage in controversial discussions
